Deep Learning based Method for Identifying Pipeline Magnetic Leakage Anomaly Data (Part 2)

2.2 Improved One-dimensional Convolutional Neural Network

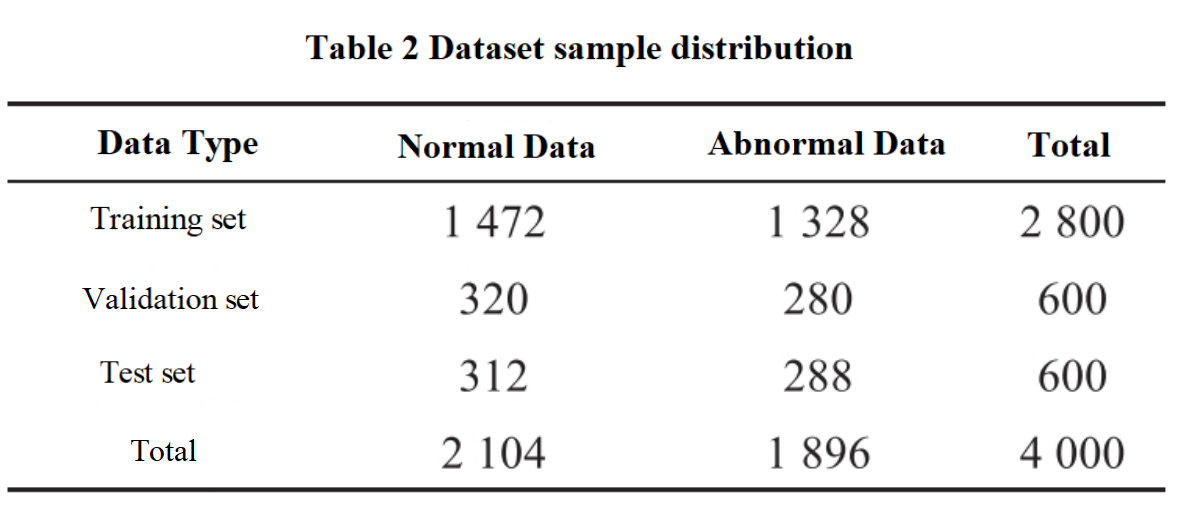

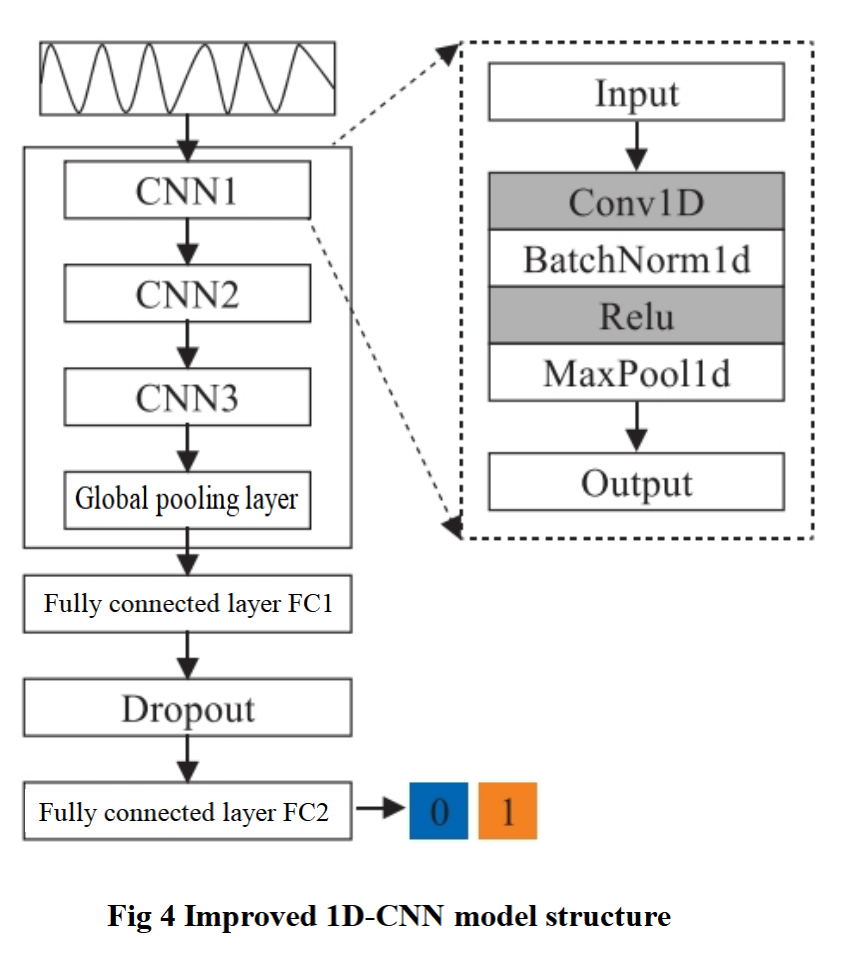

Pipeline magnetic flux leakage inspection data can be approximated as sequential data distributed over time. Based on the characteristics of the magnetic flux leakage data and the dimensionality of the samples in the dataset, a lightweight model based on a one-dimensional convolutional network was designed with the PyTorch framework to identify pipeline magnetic flux leakage in-line inspection data, with batch normalization layers and the Dropout method added. The model consists mainly of three convolutional modules, one global pooling layer, and two fully connected layers. Each convolutional module consists of one one-dimensional convolutional layer (Conv1d), one one-dimensional batch normalization layer (BatchNorm1d), an activation function, and one one-dimensional max pooling layer (MaxPool1d) (Figure 4). The parameter settings for each layer of the network are shown in Table 1.
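
The structure described above can be sketched in PyTorch roughly as follows. This is only an illustration: the class name `MFLNet1D`, the channel widths, kernel sizes, pooling window, the ReLU between FC1 and FC2, and the dropout probability are all assumptions, since the actual values are those listed in Table 1.

```python
import torch
from torch import nn


def conv_block(in_ch: int, out_ch: int, kernel_size: int) -> nn.Sequential:
    """One convolution module: Conv1d -> BatchNorm1d -> ReLU -> MaxPool1d."""
    return nn.Sequential(
        nn.Conv1d(in_ch, out_ch, kernel_size, padding=kernel_size // 2),
        nn.BatchNorm1d(out_ch),
        nn.ReLU(),
        nn.MaxPool1d(kernel_size=2),  # assumed pooling window
    )


class MFLNet1D(nn.Module):
    """Hypothetical lightweight 1D CNN; layer sizes are assumed, not from Table 1."""

    def __init__(self, num_classes: int = 2, dropout_p: float = 0.5):
        super().__init__()
        self.features = nn.Sequential(
            conv_block(1, 16, 7),    # CNN1 (assumed sizes)
            conv_block(16, 32, 5),   # CNN2 (assumed sizes)
            conv_block(32, 64, 3),   # CNN3 (assumed sizes)
        )
        self.global_pool = nn.AdaptiveAvgPool1d(1)  # global pooling
        self.classifier = nn.Sequential(
            nn.Linear(64, 32),            # FC1
            nn.ReLU(),                    # assumed activation
            nn.Dropout(dropout_p),        # Dropout only in the fully connected part
            nn.Linear(32, num_classes),   # FC2 returns raw logits (no Softmax)
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x has shape (batch, 1, 500): one channel of 500 sampling points
        x = self.features(x)
        x = self.global_pool(x).squeeze(-1)  # (batch, 64)
        return self.classifier(x)


model = MFLNet1D()
model.eval()
with torch.no_grad():
    logits = model(torch.randn(4, 1, 500))  # random stand-in input
```

For a batch of four samples the output has one row of two logits per sample, which can be passed directly to a cross-entropy loss.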

Compared with lightweight network models, deeper network architectures have greater potential to extract feature information from data. However, increasing network depth is not a simple linear stacking process: as the depth increases, optimization becomes significantly more difficult. Optimizing the structure at specific layers is more in line with practical application needs and can achieve a better balance between complexity and optimizability.

2.2.1 One-dimensional Convolutional Layer

The convolutional layer uses convolution operations to extract features from the input data and is the core component of the entire network structure. One-dimensional convolution follows the same principle as multidimensional convolution: a convolution kernel slides along the length of the input sequence, and the convolution result is computed at each position to extract the input features. The one-dimensional convolution operation is shown in equation (1).

$$Y[i] = \sum_{j=0}^{k-1} W[j]\, X[i+j] + b \tag{1}$$

In the formula, Y[i] is the value of the element at position i after the convolution operation, k is the size of the convolution kernel, X[i+j] is the element of the input sample X offset by j positions from position i, W[j] is the weight of the convolution kernel at position j, and b is the bias. Because data preprocessing has mapped the magnetic flux leakage data to the range [0, 1], the rectified linear unit (ReLU) is used as the nonlinear activation function, which effectively mitigates the vanishing gradient problem.
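
As a small worked example of equation (1) followed by ReLU, the sketch below uses plain Python with no padding and a stride of 1. The input signal and the kernel weights are made-up values chosen only for illustration.

```python
def conv1d(x, w, b=0.0):
    """Equation (1): Y[i] = sum over j of W[j] * X[i + j], plus the bias b."""
    k = len(w)
    return [
        sum(w[j] * x[i + j] for j in range(k)) + b
        for i in range(len(x) - k + 1)
    ]


def relu(values):
    """Rectified linear unit: negative values are set to zero."""
    return [max(0.0, v) for v in values]


x = [0.1, 0.2, 0.9, 0.3, 0.1]   # made-up signal, already scaled to [0, 1]
w = [-1.0, 0.0, 1.0]            # made-up kernel that responds to rising values
y = conv1d(x, w)                # one output per valid kernel position
activated = relu(y)
```

With an input of length 5 and a kernel of size 3, the output has 5 - 3 + 1 = 3 elements; the last one is negative (the signal is falling there) and is therefore zeroed by ReLU.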

2.2.2 Batch Normalization Layer

Batch normalization ensures that the inputs to each layer have a similar distribution. Although the dataset is preprocessed before being fed into the model, the data distribution may still shift as the network parameters change. BatchNorm normalizes the input of each layer using its mean and standard deviation, which reduces sensitivity to the initial parameters, accelerates model training, and makes it easier to converge to the ideal state. The batch normalization process is shown in equation (2).

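$$\hat{x}_i = \frac{x_i - \mu}{\sqrt{\sigma^2 + \theta}}, \qquad y_i = \lambda \hat{x}_i + \beta \tag{2}$$

In the formula, $\hat{x}_i$ is the normalized value of $x_i$, $x_i$ is the i-th element of the input sample, μ is the mean of the input sample data, σ² is the variance, θ is a small non-zero constant that prevents division by zero, λ and β are the scale factor and shift factor, respectively, and $y_i$ is the resulting output.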
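
The normalization step can be illustrated with a minimal pure-Python sketch. The function name `batch_norm`, the default value of θ, and the input values are assumptions for illustration. In a real BatchNorm1d layer the statistics are computed per channel over the batch, and the scale and shift factors are learned.

```python
import math


def batch_norm(xs, scale=1.0, shift=0.0, theta=1e-5):
    """Normalize xs with its mean and variance, then apply scale and shift."""
    mu = sum(xs) / len(xs)
    var = sum((x - mu) ** 2 for x in xs) / len(xs)  # biased variance
    return [scale * (x - mu) / math.sqrt(var + theta) + shift for x in xs]


out = batch_norm([0.2, 0.4, 0.6, 0.8])  # made-up inputs
```

With the default scale of 1 and shift of 0, the output has a mean of approximately zero and a variance of approximately one.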

2.2.3 Pooling Layer

The pooling layer compresses the spatial size of the feature map output by the convolutional layer, which helps reduce computation and the number of parameters in the following layers. MaxPool1d is used in each convolutional module; it keeps the maximum value within each pooling window to retain the most significant feature in each region. The pooling layer between the third convolutional module (CNN3) and the fully connected layer FC1 uses AdaptiveAvgPool1d as global pooling. AdaptiveAvgPool1d does not require a kernel size or stride to be specified; it automatically pools the entire feature map to the given target size, preserving the overall feature information and enhancing the model's generalization ability.
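
The two pooling operations can be illustrated in plain Python as follows. The window size of 2 and the feature values are assumptions for illustration; `global_avg_pool1d` mimics what AdaptiveAvgPool1d does when its target size is 1.

```python
def max_pool1d(xs, window=2):
    """Keep the maximum of each non-overlapping window (stride equals window)."""
    return [max(xs[i:i + window]) for i in range(0, len(xs) - window + 1, window)]


def global_avg_pool1d(xs):
    """Collapse the whole sequence to its mean, whatever its length."""
    return sum(xs) / len(xs)


feature_map = [0.1, 0.7, 0.3, 0.2, 0.9, 0.4]   # made-up feature values
pooled = max_pool1d(feature_map)               # [0.7, 0.3, 0.9]
summary = global_avg_pool1d(pooled)            # one value per channel
```

Because the global pooling step always returns one value per channel, the size of the input to FC1 does not depend on the length of the feature map.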

2.2.4 Loss Function

The cross-entropy loss function (CrossEntropyLoss) is selected, as shown in equation (3).

$$L = -\sum_{i} y_i \log \hat{y}_i \tag{3}$$

In the formula, L is the cross-entropy loss value, y is the true label of the sample, and ŷ is the model's prediction (the predicted class probability), with the sum taken over the classes i. Traditional classification models generally use Softmax as the output layer. Because the cross-entropy loss function in the PyTorch framework already incorporates the Softmax function, the fully connected layer FC2 can be used directly as the output layer without a separate Softmax step.
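
To show why no separate Softmax layer is needed, the following pure-Python sketch computes the cross-entropy directly from raw output values (logits) for a single sample, applying the log of Softmax inside the loss. The function name and the logit values are made up for illustration.

```python
import math


def cross_entropy_from_logits(logits, target):
    """Cross-entropy for one sample: negative log of the Softmax probability
    assigned to the target class, computed directly from raw logits."""
    m = max(logits)  # subtract the maximum for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


loss_correct = cross_entropy_from_logits([0.2, 3.1], target=1)  # label agrees
loss_wrong = cross_entropy_from_logits([0.2, 3.1], target=0)    # label disagrees
loss_uniform = cross_entropy_from_logits([0.0, 0.0], target=0)  # equals log(2)
```

The loss is small when the largest logit belongs to the labeled class and large otherwise, which is the behavior the training process relies on.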

2.3 Dropout Regularization Method

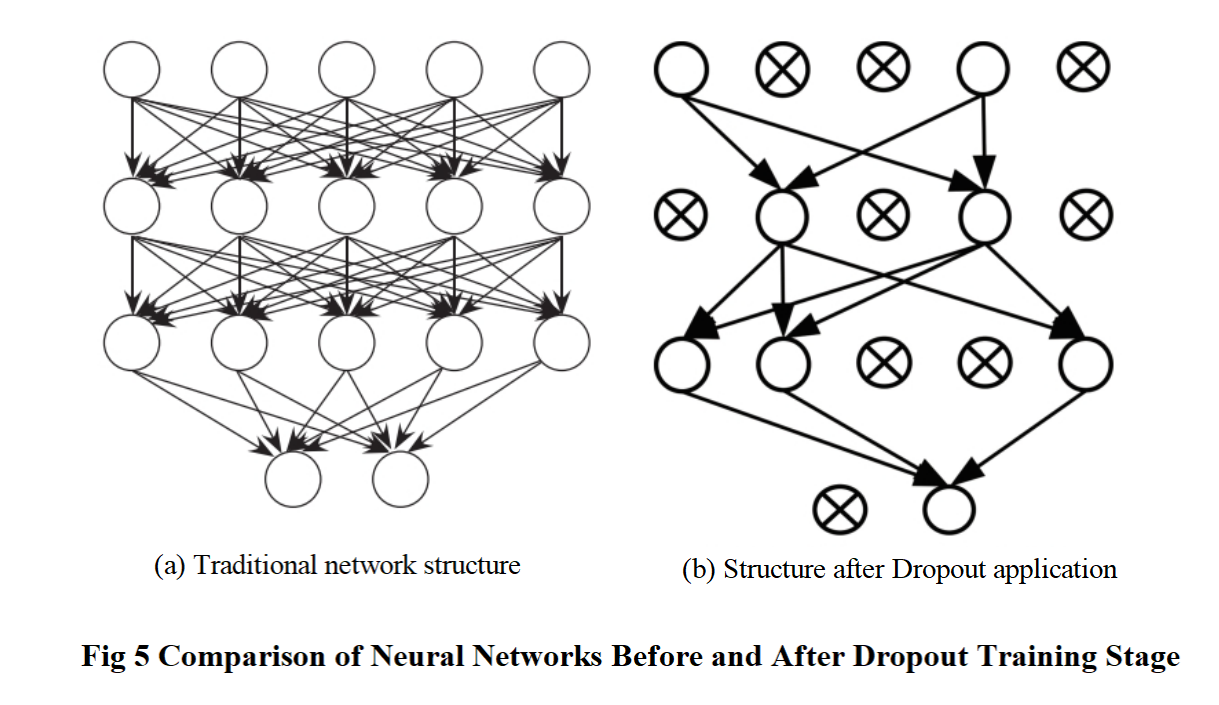

Overfitting is a common problem for network models: the model performs very well on the training set but poorly on the validation and test sets. During forward propagation in model training, the Dropout method randomly closes neurons, keeping each one with probability p; during backpropagation, the closed neurons do not update their weights. Figure 5 compares the neural network structure without and with the Dropout method, where ⊗ represents closed neurons and ○ represents retained neurons.

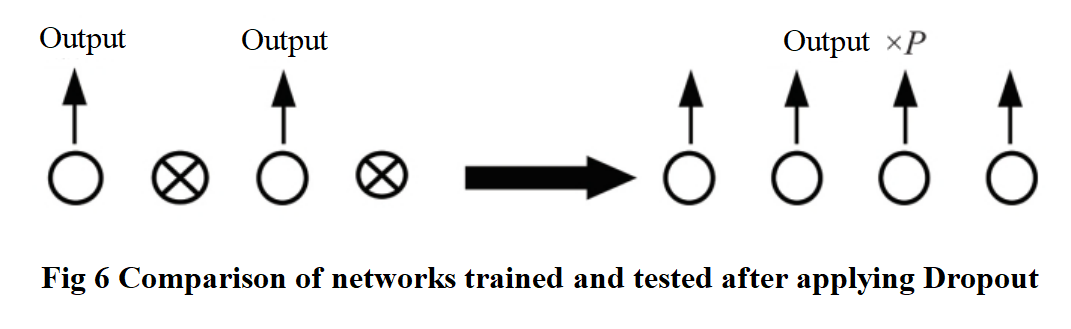

The Dropout method weakens the co-adaptation of neurons in the same layer, which improves the generalization ability of the model and effectively reduces the risk of overfitting. In the testing phase, no neurons are closed; instead, the output of each neuron is multiplied by the retention probability p so that the expected output is maintained (Figure 6).
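
A minimal pure-Python sketch of this classical scheme is given below, assuming p is the retention probability (named `keep_prob` here); the function names and the activation values are illustrative. Note that PyTorch's `nn.Dropout` takes the drop probability as its `p` argument and uses the inverted variant: retained activations are rescaled by 1/(1-p) during training and left unchanged in evaluation mode, so the test-time multiplication is not needed there.

```python
import random


def dropout_train(activations, keep_prob, rng):
    """Training pass: each neuron is kept with probability keep_prob, else zeroed."""
    return [a if rng.random() < keep_prob else 0.0 for a in activations]


def dropout_test(activations, keep_prob):
    """Test pass: no neuron is closed; outputs are scaled by keep_prob instead."""
    return [a * keep_prob for a in activations]


rng = random.Random(0)
acts = [1.0] * 10000                      # made-up activations
train_mean = sum(dropout_train(acts, 0.5, rng)) / len(acts)
test_mean = sum(dropout_test(acts, 0.5)) / len(acts)
```

Averaged over many neurons, the training-time output and the scaled test-time output are close, which is what maintaining the expected output means.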

Neurons in convolutional layers usually correspond to local features of the input data. Randomly discarding them with Dropout reduces the number of effective parameters and may disrupt the parameter-sharing property of the convolutional layer, thereby weakening the feature extraction ability of the model. Therefore, the Dropout method is introduced in the fully connected layer rather than in the convolutional layers.

3. Experiment and Result Analysis

The experimental environment configuration is as follows: the operating system is Windows 10, the programming language is Python 3.9, and the code is written in the PyCharm 2023.2.1 development environment. During the model training process, the batch size is set to 200, the learning rate is set to 0.001, and there are a total of 100 training epochs.
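
The training settings above could be wired into a PyTorch training loop along the following lines. This is a sketch under assumptions: the text does not name the optimizer, so Adam is used here purely as a placeholder, and the tiny model and random data exist only so that the example runs on its own.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

BATCH_SIZE = 200       # from the text
LEARNING_RATE = 1e-3   # from the text
EPOCHS = 100           # from the text


def train(model, loader, epochs=EPOCHS, lr=LEARNING_RATE):
    """Train with cross-entropy loss; Adam is an assumed optimizer choice."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    criterion = nn.CrossEntropyLoss()
    history = []
    model.train()
    for _ in range(epochs):
        total = 0.0
        for x, y in loader:
            optimizer.zero_grad()
            loss = criterion(model(x), y)
            loss.backward()
            optimizer.step()
            total += loss.item() * x.size(0)
        history.append(total / len(loader.dataset))  # mean loss per epoch
    return history


# Synthetic stand-in data: 400 random sequences of 500 points with random labels.
torch.manual_seed(0)
data = TensorDataset(torch.rand(400, 1, 500), torch.randint(0, 2, (400,)))
loader = DataLoader(data, batch_size=BATCH_SIZE, shuffle=True)

# Tiny placeholder model so the sketch is self-contained.
toy_model = nn.Sequential(
    nn.Conv1d(1, 4, kernel_size=5),
    nn.ReLU(),
    nn.AdaptiveAvgPool1d(1),
    nn.Flatten(),
    nn.Linear(4, 2),
)
history = train(toy_model, loader, epochs=2)  # 2 epochs only to keep the demo fast
```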

3.1 Establishment of Experimental Dataset

The pipeline magnetic flux leakage data come from field measurements taken by an in-line detector in a pipeline with a diameter of Φ1219. Because the detector samples point by point, sequences can be cut to any sampling length. Dividing the data into shorter sequences speeds up model training and increases the number of samples, but leaves too few features that can be extracted from each sample. Anomalies usually appear as changes across consecutive sampling points of a single channel. Therefore, the sequence length is fixed at 500 sampling points; that is, each magnetic flux leakage sample corresponds to a feature vector of one row and 500 columns. A label file is created that records, from left to right, the file location, signal type, channel information, start sampling point, end sampling point, and label.
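
Cutting one channel into fixed-length samples can be sketched as follows. The non-overlapping windowing and the function name `cut_windows` are assumptions made for illustration; the text only fixes the length at 500 points and states that the start and end sampling points are recorded.

```python
def cut_windows(channel, length=500):
    """Split one channel into non-overlapping windows of fixed length.

    Returns (start, end, values) tuples; a trailing remainder shorter than
    `length` is discarded.
    """
    return [
        (start, start + length, channel[start:start + length])
        for start in range(0, len(channel) - length + 1, length)
    ]


channel = [0.0] * 1750            # made-up channel with 1750 sampling points
windows = cut_windows(channel)    # three windows; the last 250 points are dropped
```

The start and end indices returned with each window correspond to the start and end sampling point columns of the label file.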

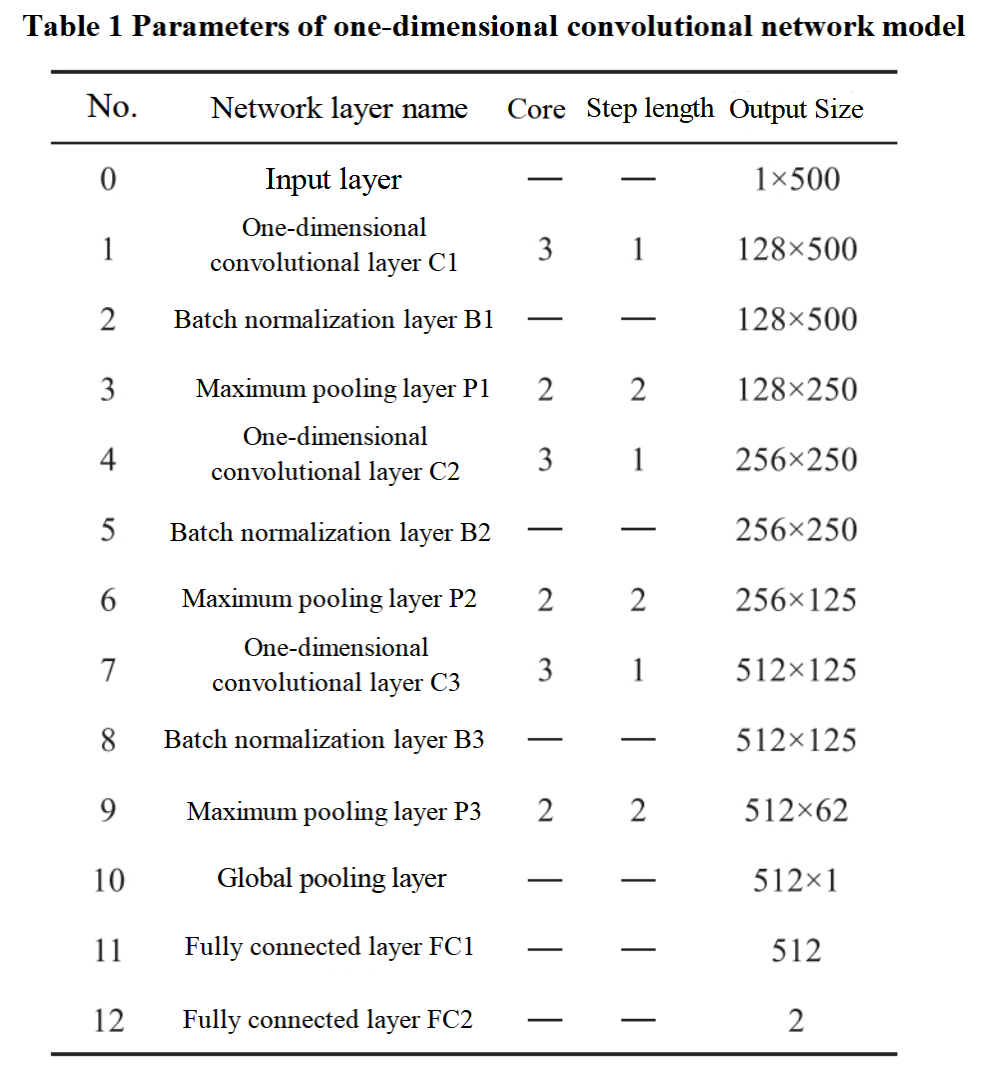

Abnormal samples are labeled 1 and normal samples are labeled 0. A total of 4000 samples are extracted to build the magnetic flux leakage dataset, which is divided into a training set, a validation set, and a test set in a ratio of 7:1.5:1.5 (Table 2), with the ratio of normal to abnormal data in each set kept close to 1:1. To prevent overfitting, the proportions of abnormal and normal samples are kept approximately the same across the training, validation, and test sets, and each sample appears in only one set.
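
One way to obtain such a split is a per-class (stratified) shuffle, sketched below in plain Python. The function name, the random seed, and the exact 2000/2000 class balance are assumptions for illustration; the actual counts are those given in Table 2.

```python
import random


def stratified_split(labels, ratios=(0.7, 0.15, 0.15), seed=0):
    """Return disjoint train/val/test index lists with the same class mix in each."""
    rng = random.Random(seed)
    splits = ([], [], [])
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        n_train = round(len(idx) * ratios[0])
        n_val = round(len(idx) * ratios[1])
        splits[0].extend(idx[:n_train])
        splits[1].extend(idx[n_train:n_train + n_val])
        splits[2].extend(idx[n_train + n_val:])   # remainder goes to the test set
    return splits


labels = [0] * 2000 + [1] * 2000   # assumed exact 1:1 balance for illustration
train_idx, val_idx, test_idx = stratified_split(labels)
```

Under these assumptions, 4000 samples at 7:1.5:1.5 give 2800 training, 600 validation, and 600 test samples, each half normal and half abnormal, and no index appears in more than one set.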